Framework for evaluating variant detection methods: comparison of aligners and callers

Variant detection and grading overview

Developing pipelines for detecting variants from high throughput sequencing data is challenging due to rapidly changing algorithms and relatively low concordance between methods. This post will discuss automated methods providing evaluation of variant calls, enabling detailed diagnosis of discordant differences between multiple calling approaches. This allows us to:

- Characterize strengths and weaknesses of alignment, post-alignment preparation and calling methods.

- Automatically verify pipeline updates and installations to ensure variant calls recover expected variations. This extends the XPrize validation protocol to provide full summary metrics on concordance and discordance of variants.

- Make recommendations on best-practice approaches to use in sequencing studies requiring either exome or whole genome variant calling.

- Identify characteristics of genomic regions more likely to have discordant variants which require additional care when making biological conclusions based on calls, or lack of calls, in these regions.

This evaluation work is part of a larger community effort to better characterize variant calling methods. A key component of these evaluations is a well characterized set of reference variations for the NA12878 human HapMap genome, provided by NIST’s Genome in a Bottle consortium. The diagnostic component of this work supplements emerging tools like GCAT (Genome Comparison and Analytic Testing), which provides a community platform for comparing and discussing calling approaches.

I’ll show a 12 way comparison between 2 different aligners (novoalign and bwa mem), 2 different post-alignment preparation methods (GATK best practices and the Marth lab’s gkno pipeline), and 3 different variant callers (GATK UnifiedGenotyper, GATK HaplotypeCaller, and FreeBayes). This allows comparison of openly available methods (bwa mem, gkno preparation, and FreeBayes) with those that require licensing (novoalign, GATK’s variant callers). I’ll also describe bcbio-nextgen, the fully automated open-source pipeline used for variant calling and evaluation, which allows others to easily bring this methodology into their own work and extend this analysis.

Aligner, post-alignment preparation and variant calling comparison

To compare methods, we called variants on a NA12878 exome dataset from EdgeBio’s clinical pipeline and assessed them against the NIST Genome in a Bottle reference material. Discordant positions where the reference and evaluation variants differ fall into three different categories:

- Extra variants, called in the evaluation data but not in the reference. These are potential false positives.

- Missing variants, found in the NA12878 reference but not in the evaluation data set. These are potential false negatives. The use of high quality reference materials from NIST enables identification of genomic regions we fail to call in.

- Shared variants, called in both the evaluation and reference but differently represented. This could result from allele differences, such as heterozygote versus homozygote calls, or variant identification differences, such as indel start and end coordinates.

To further identify causes of discordance, we subdivide the missing and extra variants using annotations from the GEMINI variation framework:

- Low coverage: positions with limited read coverage (4 to 9 reads).

- Repetitive: regions identified by RepeatMasker.

- Error prone: variants falling in motifs found to induce sequencing errors.

We subdivide and restrict our comparisons to help identify sources of differences between methods indistinguishable when looking at total discordant counts. A critical subdivison is comparing SNPs and indels separately. With lower total counts of indels but higher error rates, each variant type needs independent visualization. Secondly, it’s crucial to distinguish between discordance caused by a lack of coverage, and discordance caused by an actual difference in variant assessment. We evaluate only in callable regions with 4 or more reads. This low minimum cutoff provides a valuable evaluation of low coverage regions, which differ the most between alignment and calling methods.

I’ll use this data to provide recommendations for alignment, post-alignment preparation and variant calling. In addition to these high level summaries, the full dataset and summary plots available below providing a starting place for digging further into the data.

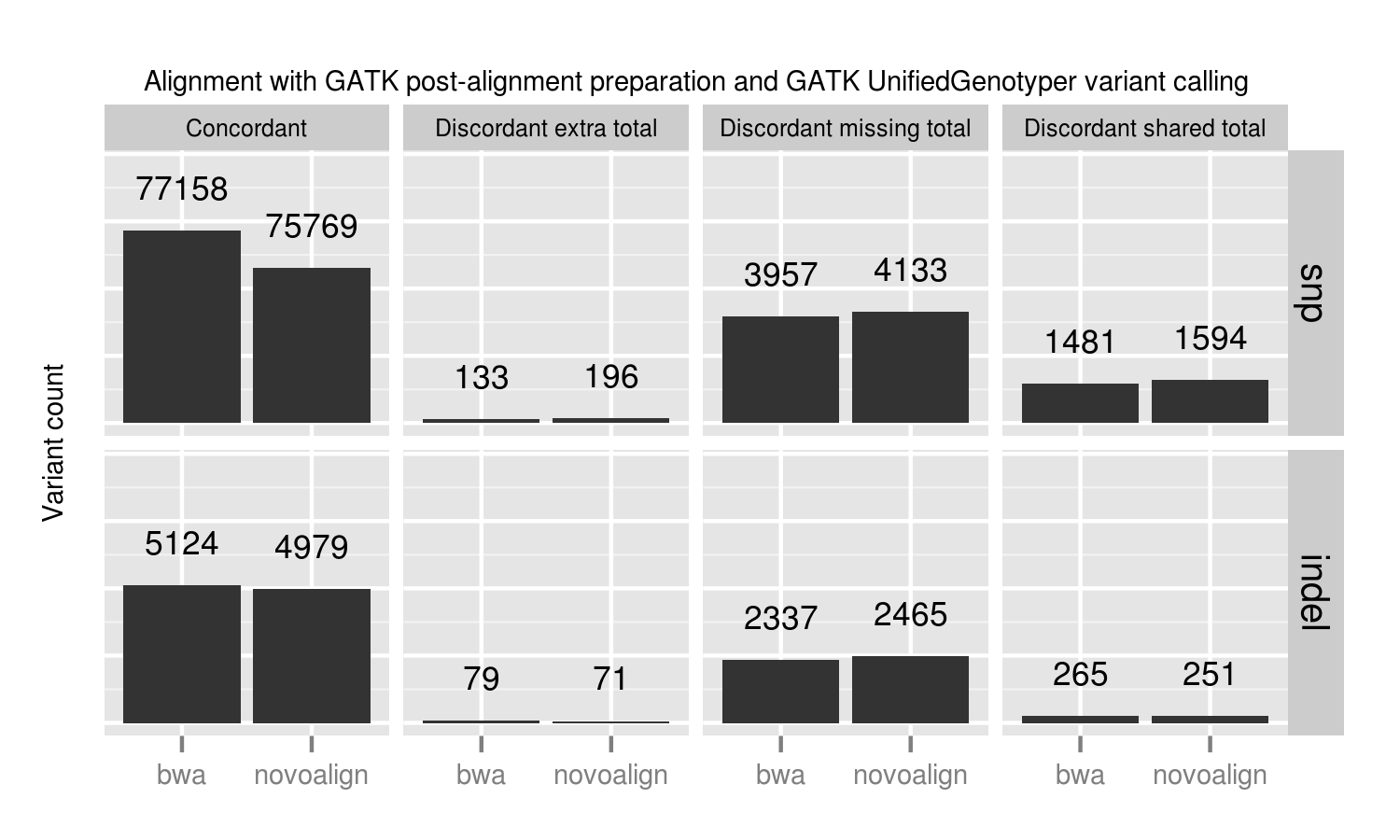

Aligners

We compared two recently released aligners designed to work with longer reads coming from new sequencing technologies: novoalign (3.00.02) and bwa mem (0.7.3a). bwa mem identified 1389 additional concordant SNPs and 145 indels not seen with novoalign. 1024 of these missing variants are in regions where novoalign does not provide sufficient coverage for calling. Of those, 92% (941) have low coverage with less than 10 reads in the bwa alignments. Algorithmic changes impact low coverage regions more due to the decreased evidence and susceptibility to crossing calling coverage thresholds, so we need extra care and consideration of calls in these regions.

Our standard workflow uses novoalign based on its stringency in resolving large insertions and deletions. These results suggest equally good results using bwa mem, along with improved processing times. One caveat to these results is that some of the available Illumina call data that feeds into NIST’s reference genomes comes from a bwa alignment, so some differences may reflect a bias towards bwa alignment heuristics. Using non-simulated reference data sets has the advantage of capturing real biological and process errors, but requires iterative improvement of the reference materials to avoid this type of potential algorithmic bias.

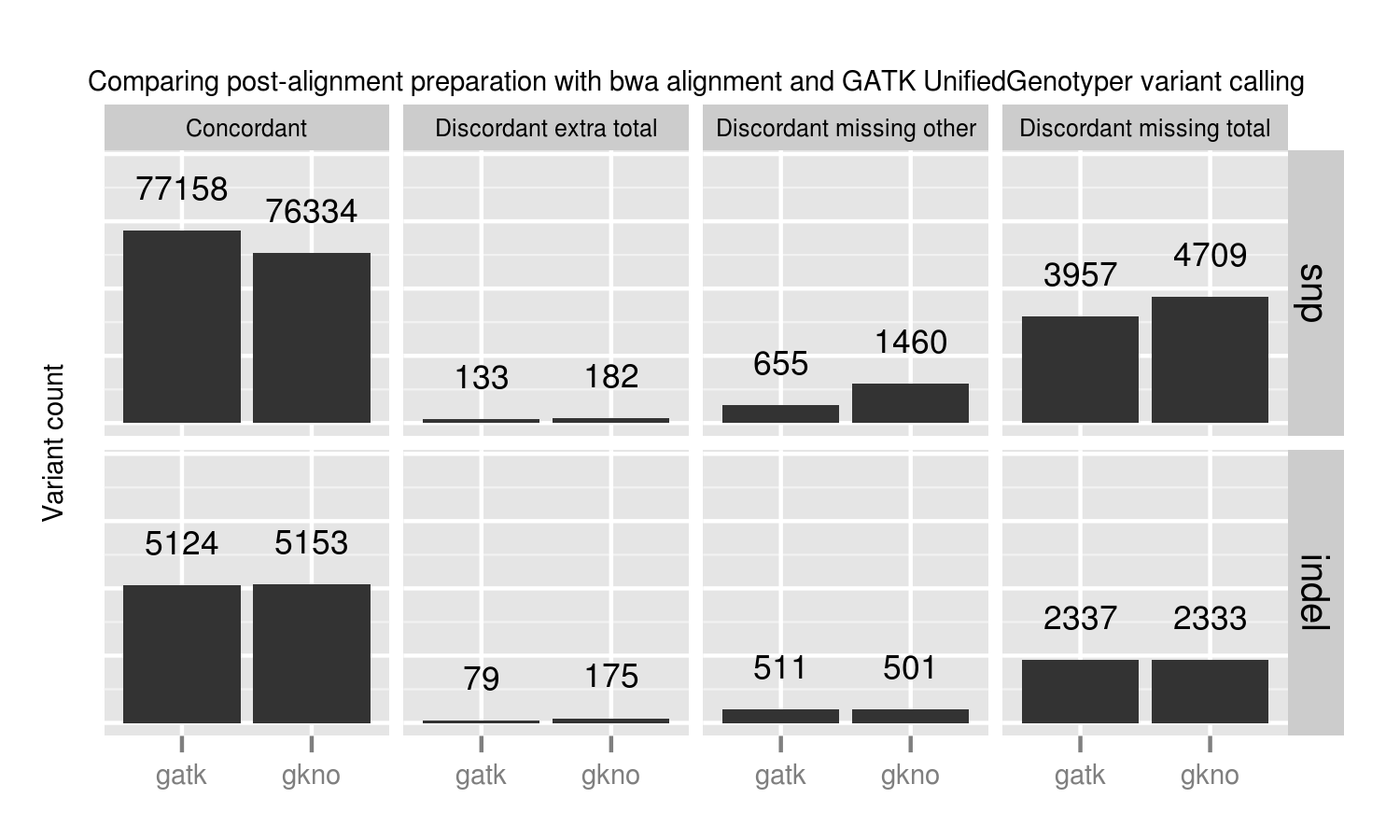

Post-alignment preparation and quality score recalibration

We compared two methods of quality recalibration:

- GATK’s best practices (2.4-9): This involves de-duplication with Picard MarkDuplicates, GATK base quality score recalibration and GATK realignment around indels.

- The Marth Lab’s gkno realignment pipeline: This performs de-duplication with samtools rmdup and realignment around indels using ogap. All commands in this pipeline work on streaming input, avoiding disk IO penalties by using unix pipes. This piped approach improves scaling on large numbers of whole genome samples. Notably, our implementation of the pipeline does not use a base quality score recalibration step.

GATK best practice pipelines offer an advantage over the gkno-only pipeline primarily because of improvements in SNP calling from base quality recalibration. Specifically we lose ~1% (824 / 77158) of called variations. These fall into the discordant missing “other” category, so we cannot explain them by metrics such as coverage or genome difficulty. The simplest explanation is that initial poor quality calculations in those regions result in callers missing those variants. Base quality recalibration helps recover them. These results match Brendan O’Fallon’s recent analysis of base quality score recalibration.

This places a practical number on the lost variants when avoiding recalibration either due to scaling or GATK licensing concerns. Some other options for recalibration include Novoalign’s Quality Recalibration and University of Michigan’s BamUtil recab, although we’ve not yet tested either in depth as potential supplements to improve calling in non-GATK pipelines.

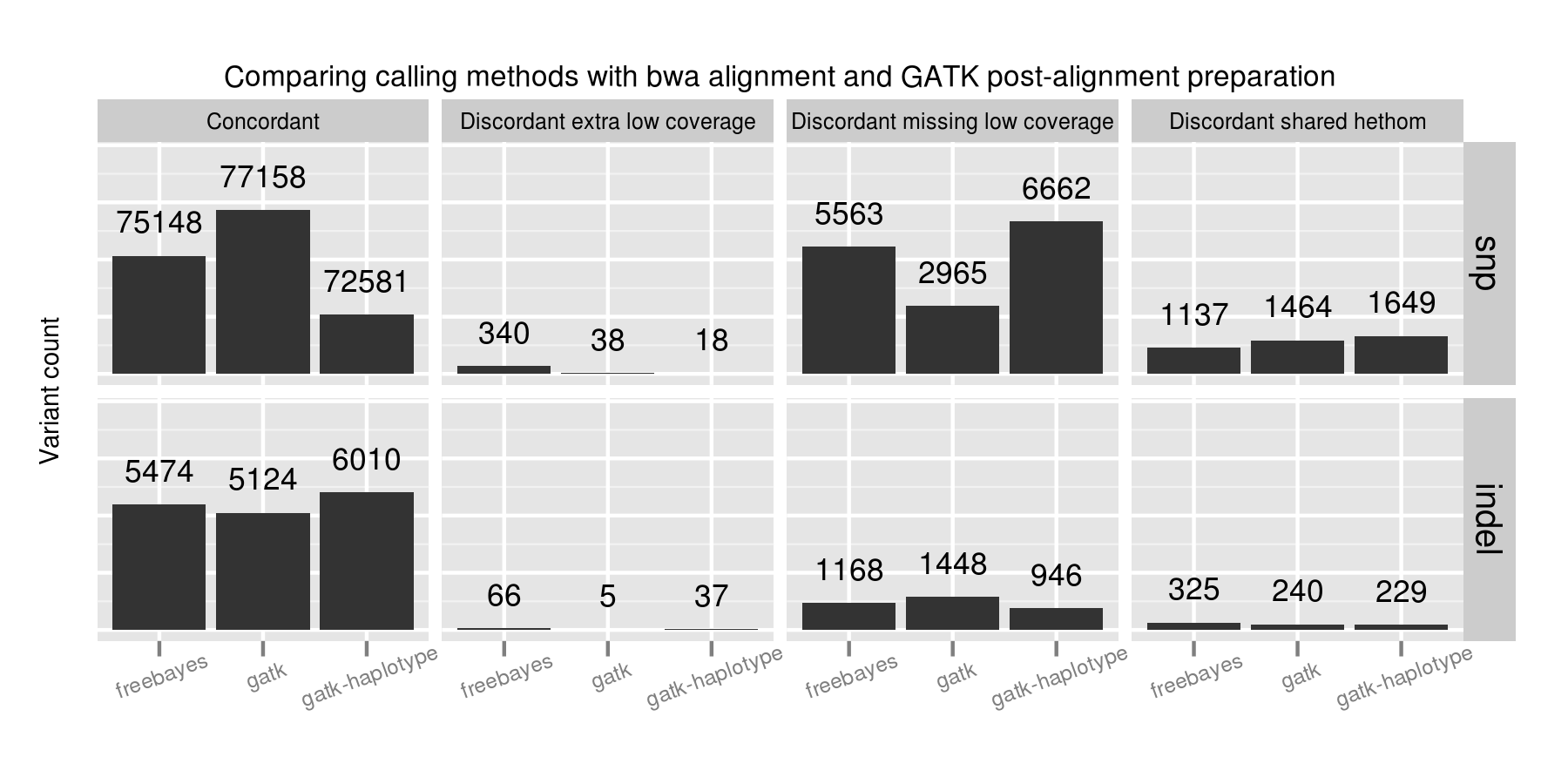

Variant callers

For this comparison, we used three general purpose callers that handle SNPs and small indels, all of which have updated versions since our last comparison:

- FreeBayes (0.9.9 296a0fa): A haplotype-based Bayesian caller from the Marth Lab, with filtering on quality score and read depth.

- GATK UnifiedGenotyper (2.4-9): GATK’s widely used Bayesian caller, using filtering recommendations for exome experiments from GATK’s best practices.

- GATK HaplotypeCaller (2.4-9): GATK’s more recently developed haplotype caller which provides local assembly around variant regions, using filtering recommendations for exomes from GATK’s best practices.

Adjusting variant calling methods has the biggest impact on the final set of calls. Called SNPs differ by 4577 between the three compared approaches, in comparison with aligner and post-alignment preparation changes which resulted in a maximum difference of 1389 calls. This suggests that experimenting with variant calling approaches currently provides the most leverage to improve calls.

A majority of the SNP concordance differences between the three calling methods are in low coverage regions with between 4 and 9 reads. GATK UnifiedGenotyper performs the best in detecting SNPs in these low coverage regions. FreeBayes and GATK HaplotypeCaller both call more conservatively in these regions, generating more potential false negatives. FreeBayes had the fewest heterozygote/homozygote discrimination differences of the three callers.

For indels, FreeBayes and HaplotypeCaller both provide improved sensitivity compared to UnifiedGenotyper, with HaplotypeCaller identifying the most, especially in low coverage regions. In contrast to the SNP calling results, FreeBayes has more calls that match the expected indel but differ in whether a call is a heterozygote or homozygote.

No one caller outperformed the others on all subsets of the data. GATK UnifiedGenotyper performs best on SNPs but is less sensitive in resolving indels. GATK HaplotypeCaller identifies the most indels, but is more conservative than the other callers on SNPs. FreeBayes provides intermediate sensitivity and specificity between the two for both SNPs and indels. A combined UnifiedGenotyper and HaplotypeCaller pipeline for SNPs and indels, respectively, would provide the best overall calling metrics based on this set of comparisons.

Low coverage regions are the key area of difference between callers. Coupled with the alignment results and investigation of variant changes resulting from quality score binning, this suggests we should be more critical in assessing both calls and coverage in these regions. Assessing coverage and potential false negatives is especially critical since we lack good tools to summarize and prioritize genomic regions that are potentially missed during sequencing. This also emphasizes the role of population-based calling to help resolve low coverage regions, since callers can use evidence from multiple samples to better estimate the likelihoods of low coverage calls.

Automated calling and grading pipeline

Method comparisons become dated quickly due to the continuous improvement in aligners and variant callers. While these recommendations are useful now, in 6 months there will be new releases with improved approaches. This rapid development cycle creates challenges for biologists hoping to derive meaning from variant results: do you stay locked on software versions whose trade offs you understand, or do you attempt to stay current and handle re-verifying results with every new release?

Our goal is to provide a community developed pipeline and comparison framework that ameliorates this continuous struggle to re-verify. The analysis done here is fully automated as part of the bcbio-nextgen analysis framework. This framework code provides full exposure and revision tracking of all parameters used in analyses. For example, the ngsalign module contains the command lines used for bwa mem and novoalign, as well as all other tools.

To install the pipeline, third-party software and required data files:

wget https://raw.github.com/chapmanb/bcbio-nextgen/master/scripts/bcbio_nextgen_install.py python bcbio_nextgen_install.py /usr/local /usr/local/share/bcbio-nextgen

The installer bootstraps all installation on a bare machine using the CloudBioLinux framework. More details and options are available in the installation documentation.

To re-run this analysis, retrieve the input data files and configuration as described in the bcbio-nextgen example documentation with:

$ mkdir config && cd config $ wget https://raw.github.com/chapmanb/bcbio-nextgen/master/config/\ examples/NA12878-exome-methodcmp.yaml $ cd .. && mkdir input && cd input $ wget https://dm.genomespace.org/datamanager/file/Home/EdgeBio/\ CLIA_Examples/NA12878-NGv3-LAB1360-A/NA12878-NGv3-LAB1360-A_1.fastq.gz $ wget https://dm.genomespace.org/datamanager/file/Home/EdgeBio/\ CLIA_Examples/NA12878-NGv3-LAB1360-A/NA12878-NGv3-LAB1360-A_2.fastq.gz $ wget https://s3.amazonaws.com/bcbio_nextgen/NA12878-nist-v2_13-NGv3-pass.vcf.gz $ wget https://s3.amazonaws.com/bcbio_nextgen/NA12878-nist-v2_13-NGv3-regions.bed.gz $ gunzip NA12878-nist-*.gz $ wget https://s3.amazonaws.com/bcbio_nextgen/NGv3.bed.gz $ gunzip NGv3.bed.gz

Then run the analysis, distributed on 8 local cores, with:

$ mkdir work && cd work $ bcbio_nextgen.py bcbio_system.yaml ../input ../config/NA12878-exome-methodcmp.yaml -n 8

The bcbio-nextgen documentation describes how to parallelize processing over multiple machines using cluster schedulers (LSF, SGE, Torque).

The pipeline and comparison framework are open-source and configurable for multiple aligners, preparation methods and callers. We invite anyone interested in this work to provide feedback and contributions.

Full data sets

We extracted the conclusions for alignment, post-alignment preparation and variant calling from analysis of the full dataset. The visualizations for the full data are not as pretty but we make them available for anyone interested in digging deeper:

- Summary CSV of comparisons split by methods and concordance/discordance types, easily importable into R or pandas for further analysis.

- Code for preparing and plotting results

- Full comparisons of all 12 methods, stratified by concordance and discordance: SNPs and indels

- Boxplots of differences between alignment methods: SNPs and indels

- Boxplots of differences between post-alignment preparation methods: SNPs and indels

- Boxplots of differences between variant calling methods: SNPs and indels

The comparison variant calls are also useful for pinpointing algorithmic differences between methods. Some useful subsets of variants:

- Concordant variants called by bwa and not novoalign, where novoalign did not have sufficient coverage in the region. These are calls where either novoalign fails to map some reads, or bwa maps too aggressively: VCF of bwa calls with low or no coverage in novoalign.

- Discordant variants called consistently by multiple calling methods. These are potential errors in the reference material, or consistently problematic calling regions for multiple algorithms. Of the 9004 shared discordants, the majority are potential false negatives not seen in the evaluation calls (7152; 79%). Another large portion is heterozygote/homozygote differences, which make up 1627 calls (18%). 6652 (74%) of the differences have low coverage in the exome evaluation, again reflecting the difficulties in calling in these regions. The VCF of discordants found in 2 or more callers contains these calls, with a ‘GradeCat’ INFO tag specifying the discordance category.

We encourage reanalysis and welcome suggestions for improving the presentation and conclusions in this post.

Thanks to everyone who submitted feedback on the earlier draft I posted in response to a discussion on twitter. Since they are anonymous comments I can’t respond directly but incorporated much of the feedback into the post. Some additional details on these questions:

– What interval regions do you use? I included the BED file from the NimbleGen SeqCapEZ exome library, version 3 (http://www.nimblegen.com/exomev3launcheq/) used. I do restrict to targeted capture regions.

– Suggestion to visualize with Venn diagrams: GCAT does a great job of presenting the data this way for multiple comparisons. My goal here is to complement their work with this more in-depth exploration of discordants. Longer term I’m hoping to integrate more directly with them as a community resource.

– Suggestion for post-calling imputation on normalizing caller differences: Thanks for the useful suggestion. I’m not an expert on imputation methods but would be happy to work with someone on this. Another related idea is calling with a background population to help inform single sample calling. FreeBayes provides this with the `–variant-input` parameter.

Happy to discuss any of these ideas more in the comments.

Brad Chapman

May 6, 2013 at 9:03 am

Very nice!

Mark DePristo

May 6, 2013 at 10:40 am

Hi Brad,

Thanks for posting this information. My group is always wrestling with the issues of coming up with any data set that we have some validated variants for.

I tried poking around on the genome in a bottle website that you pointed to. I gather that the idea is to identify good samples with high levels of validated variation for the community to use to calibrate….well…everything! Unfortunately, I can’t find a link on their website where I could ask my question so I’ll try asking here instead….

The data set you used is a cell line with a number of validated variants. Do you know if there is a way to find similar genome data sets? On the genome in a bottle website there is a link that points to lots of slides about what a great data set would look like, but I can’t seem to find a summary of the available data sets.

How did you learn about the exome set that you used? Can you point me in the right direction?

thanks

RIchard

Richard

May 6, 2013 at 4:46 pm

Richard;

Thanks for all the helpful feedback. The short answer to your question is that developing resources like you describe is still a work in progress and is the goal of these projects. There isn’t yet a one-stop shop for validated data sets along with protocols to analyze them. That’s one of the areas this post and development work is trying to address.

Community projects like Genome in a Bottle, GCAT and Seqbench (http://seqbench.org/) are some places to watch. It’s exciting that there is a lot of interest in developing these validation approaches, so hopefully the situation for public validation data sets will improve quickly.

Brad Chapman

May 8, 2013 at 7:00 am

Very nice summary of some of the most up to date tools. The tool set looks to be very valuable for new groups wanting to validate their internal validation runs with NA12878.

Jon Keats

May 11, 2013 at 8:13 pm

Thanks Brad for this great article.

I was asking myself about the quality of variants called on repetitive regions and/or sequencing error prone motifs. Did you find any correlation with discordant calls as you did for low coverage regions?

Pablo

May 16, 2013 at 4:15 am

Pablo;

Thanks much for the feedback. The full comparison plots in the last section contain the results split by error prone and repeat regions. Low coverage discordants dominate relative to these, which is why I focused on them in the post. Error prone regions did not appear to have much predictive power, but repeat regions show some useful differences between callers. Specifically, missed discordant calls (potential false negatives) are higher for GATK UnifiedGenotyper and HaplotypeCaller than FreeBayes. However, repeat regions are notoriously difficult to deal with because of representation issues, so I was hoping to dig into these more before drawing any conclusions.

Brad Chapman

May 16, 2013 at 2:00 pm

Great! Thanks again.

Pablo

May 17, 2013 at 3:18 am

The NIST alignment was done with bwa-backtrack, the original algorithm. Bwa-mem is a distinct algorithm in most aspects, from seeding to SW extension to the pairing strategy. Nonetheless, as long as I wrote both algorithms, the argument that the evaluation biases towards bwa-mem is still true. At least on simulated data, novoalign still seems more accurate.

Heng

May 16, 2013 at 8:46 pm

Heng;

Great point, thank you for clarifying my brainstorming on potential biases. We’re looking at the comparison with Colin from Novocraft and the major difference appears to be in soft clipping. The novoalign default is currently more stringent than bwa-mem. I’ll write an updated post with these results. Thanks for the thoughts, bwa-mem and all your work.

Brad Chapman

May 17, 2013 at 9:42 am

I would imagine that the NIST materials would be problematic for this kind of comparison due to their extremely heavy reliance on the GATK. Do you have an example based on a context where the genomic state is independently known, either through simulation or non-NGS methods?

In the case of simulated data, I have observed a surprisingly large false negative rate for the GATK methods, which you allude to in comments. This effect should be minimized here, because the basis for comparison is GATK.

Erik Garrison

October 2, 2013 at 9:04 am

Erik;

Absolutely, I’m looking for ways to reduce the GATK-bias in the reference materials. It would be useful to be able to identify specific regions where it looks like GATK doesn’t do as well as FreeBayes or other callers. I know Justin Zook at NIST would definitely be happy to get this kind of feedback.

I haven’t used simulated datasets but it would be great to include as part of the comparisons. Do you have any standard simulated benchmarks with known VCFs that we could incorporate?

Brad Chapman

October 2, 2013 at 2:27 pm

Hi Brad, are the bam files produced in the analysis available anywhere?

Albert.

albert vilella

February 24, 2014 at 11:22 am

Albert;

Sorry I don’t have those available on S3, but the full instructions for running the pipeline and generating them are here:

https://bcbio-nextgen.readthedocs.org/en/latest/contents/testing.html#exome-with-validation-against-reference-materials

Hope that helps some.

Brad Chapman

February 25, 2014 at 8:52 pm

Hi Brad! Great work. Very helpful and insightful.

I was wondering from where/how did you get the GIAB exome variants? I could not find a direct link for the variants falling in the exomes of NA12878 from GIAB website. I need those variants for evaluating my exome-variants calling pipeline.

Thanks!

Nitin

February 1, 2016 at 10:54 am

Nitin;

Thanks much, very glad this is helpful. There is no special download for exome vairants from GiaB. It is a single distribution for all the whole genome variants (ftp://ftp.ncbi.nlm.nih.gov/giab/ftp/release/NA12878_HG001/NISTv2.19/). I intersect the BED files of trusted regions from GiaB with the BED file of targets from the exome capture kit of interest. This creates the regions to assess and then you can either subset the VCF files by them for comparison, or use a caller that handles this directly. For comparisons, we use rtg vcfeval (https://github.com/RealTimeGenomics/rtg-tools) which can take the intersected BED file directly along with the original GiaB VCF and your VCF file of calls. Hope this helps.

Brad Chapman

February 2, 2016 at 7:35 pm

Thanks, Brad! This is very helpful.

Nitin

February 3, 2016 at 9:55 am